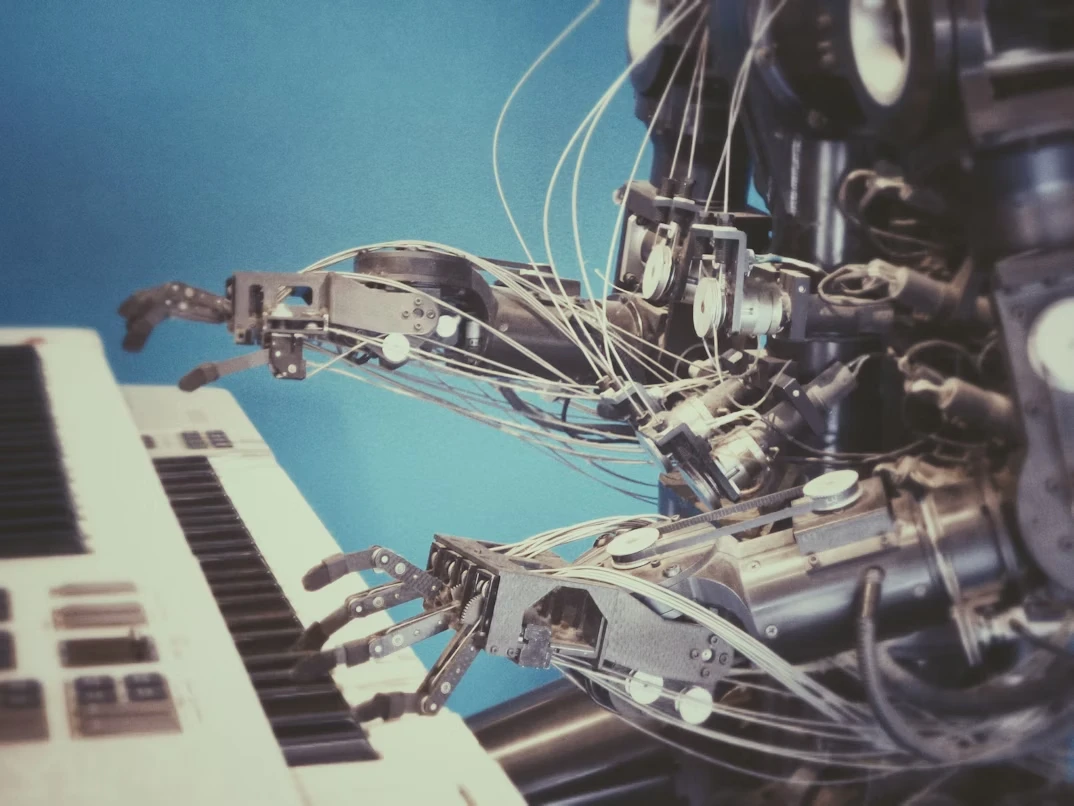

Every day we think, reason, and communicate with each other, and this is normal. After all, we are sentient beings. But we are no longer the only ones in this world with the ability to think: another human creation, artificial intelligence (AI), now has it too.

A Few Words About The Artificial Intelligence Definition

So, what is AI? Artificial intelligence enables computers to learn both with the help of a teacher (from labeled examples) and from their own experience. Neural networks can quickly adapt to an enormous volume of new parameters and perform tasks that they couldn’t handle before.

AI, like everything in our reality, has its own life cycle. The world first spoke about it in 1956. Then it stayed in the shadows for several decades. Today, neural networks are evolving rapidly, and their capabilities are increasingly approaching human ones. Although AI is still very young, it has already shown its huge potential and a very wide range of possibilities.

Let’s sit in a fictional time machine and go on an exciting journey through the most important and interesting stages of AI development.

1. The First Mention Of The “Three Laws Of Robotics”

In 1942, the famous science fiction writer Isaac Asimov first formulated the “Three Laws of Robotics” in his short story “Runaround”:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

The story “Runaround” tells of a robot called Speedy, which found itself in a situation where the Third Law came into conflict with the Second. It was one of the first works that made the world think about the appearance of machines endowed with intelligence. Today, Asimov’s laws are still frequently cited in discussions of AI ethics and safety.

2. “The Game Of Imitation” From Alan Turing

In 1950, Alan Turing, an influential researcher, asked: “Can machines think?” Turing proposed a framework for assessing the intelligence of machines. It makes it possible to approach one of the important questions: can machines be considered intelligent if they are able to imitate human behavior?

Based on these considerations, he devised the “Imitation Game”, which was later called the Turing Test. The essence of the test is that a human judge must determine, from a written conversation alone, whether they are corresponding with a person or a machine.

3. Conference At Dartmouth College

Prior to this conference, scientists had already formed the concepts of neural networks and natural language processing, but there was no universally accepted term for the broad concept of a machine “mind”. In the summer of 1956, a scientific conference was held at Dartmouth College, where the young and ambitious scientist John McCarthy introduced and defined the term “artificial intelligence”. We can say that since then AI has been a field of research in its own right.

4. Frank Rosenblatt’s Perceptron

In 1957, the promising psychologist Frank Rosenblatt created the “perceptron”, essentially the first primitive artificial neuron. Its hardware implementation was called the Mark 1 Perceptron. The device was an analog neural network consisting of a grid of photosensitive elements connected by wires to functional units (electric motors and rotary resistors). The “Perceptron Algorithm” developed by Rosenblatt made it possible to train the device: its connection weights were adjusted whenever it classified an example incorrectly.

Scientists argued for a long time about the importance of this invention, but time settled the matter: the Mark 1 was the forerunner of modern deep neural networks.
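The learning rule behind the perceptron can be illustrated with a short software sketch. This is not Rosenblatt's hardware or his original formulation, only a minimal modern version of the idea; the function names `train_perceptron` and `predict`, the learning rate, and the toy AND data set are assumptions made for illustration.

```python
def predict(weights, bias, x):
    """Fire (1) if the weighted sum of inputs exceeds zero, else stay silent (0)."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Adjust weights only when the current prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            delta = target - predict(weights, bias, x)  # -1, 0, or +1
            if delta != 0:
                # Nudge the decision boundary toward the misclassified example.
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly, stop early
            break
    return weights, bias


# Toy data (hypothetical): learn the logical AND of two binary inputs.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])
```

A single perceptron like this can only separate classes with a straight line (or plane), which is one reason its importance was debated for so long.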

5. The First Difficulties

For more than a decade, impressive investments were poured into the study of AI, and no one really asked for results. Some scholars, not wishing to part with good funding, often exaggerated the importance of their research.

2 Documents Changed Everything:

1966 – the ALPAC report for the US government;

1973 – the Lighthill report for the British government.

Each of them contained an objective assessment of research in the field of AI (the ALPAC report focused on machine translation) and a rather pessimistic forecast regarding the development of “smart” machines in the future.

As a result, the governments of both countries began to cut investments significantly and set strict limits on the timing and expected results of research. This led to stagnation in the field of AI development, which lasted until the beginning of the 80s.

6. Expert Systems

The 80s may seem successful for AI at first glance, with the advent of “expert systems” that stored huge amounts of data and were able to imitate the human decision-making process.

The technology was created at Carnegie Mellon University for Digital Equipment Corporation, and it spread very quickly to other large companies. But in 1987, the success of expert systems began to decline rapidly as major hardware manufacturers pulled out of the market.

The crisis hit. Funding for AI development was again suspended, and the term “AI” fell out of favor, replaced by “computer science”, “machine learning” and “analytics”.

7. Artificial Neural Network vs Kasparov

Public opinion about AI improved in 1997, when IBM’s Deep Blue computer defeated the world chess champion Garry Kasparov in a six-game match. The match was followed live. Of the six games, three ended in a draw, the computer won two and Kasparov won just one. A year earlier, Kasparov had played the previous version of Deep Blue and won.

Deep Blue relied on the “brute force” method: it searched through enormous numbers of possible continuations. A person can reportedly consider about 50 possible moves, while the computer evaluated almost 200 million positions per second. It is difficult to call it a deep neural network, because it used ready-made algorithms and did not have the ability to learn. Still, the event reportedly raised the value of IBM shares by $10 million.
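To give a feel for what “brute force” means here, the sketch below exhaustively searches a tiny game tree with the classic minimax idea. It is not Deep Blue's actual program, which was far more elaborate; the toy game (players alternately take 1 to 3 stones, and whoever takes the last stone wins) and the function names `minimax` and `best_move` are assumptions chosen for illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # remember positions that were already searched
def minimax(stones, maximizing):
    """Return +1 if the maximizing player wins with perfect play, else -1."""
    if stones == 0:
        # The previous player took the last stone, so the player to move has lost.
        return -1 if maximizing else 1
    # Try every legal move and look at every possible reply, all the way down.
    scores = [
        minimax(stones - take, not maximizing)
        for take in (1, 2, 3)
        if take <= stones
    ]
    return max(scores) if maximizing else min(scores)


def best_move(stones):
    """Pick the move whose resulting position is best for the player to move."""
    legal = [take for take in (1, 2, 3) if take <= stones]
    return max(legal, key=lambda take: minimax(stones - take, False))


print(best_move(7))  # number of stones to take from a pile of 7
```

Chess has far too many positions to search to the end like this, so a chess engine has to cut the search off at some depth and score the resulting positions with a hand-written evaluation function.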

8. Start Of The Google Brain Project

From 1997 to 2011, scientists all over the world worked to create artificial neural networks similar to the human brain. Google programmer Jeff Dean and Stanford University professor of computer science Andrew Yan-Tak Ng came closest to this goal. These eminent people set out to create a large neural network, backed by the power of Google’s servers.

The program used the resources of 16,000 processor cores. The essence of the experiment was that the system processed 10 million random frames from YouTube videos. The researchers did not tell the network what to look for. This was intentional: when an AI is given no labels, it tries to find patterns and form its own categories without a teacher (unsupervised learning).

Training lasted three days, and as a result the system learned to recognize three kinds of visual images: human faces, human bodies, and cats. This remarkable experiment was a breakthrough in training deep neural networks for computer vision without a teacher, and it also marked the beginning of the Google Brain project.
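Learning “without a teacher” can be illustrated on a much smaller scale. The sketch below uses k-means clustering, a simple unsupervised method that is swapped in here purely for illustration and is not the technique used in the Google experiment; the function name `kmeans_1d`, the sample numbers, and the starting centers are all hypothetical.

```python
def kmeans_1d(points, centers, iterations=10):
    """Group unlabeled numbers around centers, refining the centers each round."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            # Assign each point to the closest current center.
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [
            sum(group) / len(group) if group else centers[i]
            for i, group in enumerate(clusters)
        ]
    return centers, clusters


# No labels are provided; the two groups emerge from the data alone.
data = [1.0, 1.2, 0.8, 9.0, 9.5, 10.1]
centers, clusters = kmeans_1d(data, centers=[0.0, 5.0])
print(clusters)
```

The algorithm is never told which numbers belong together, yet it separates the small values from the large ones, which is the same basic idea as a network discovering “cat” as a category on its own.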

9. A New Stage In The Development Of AI

In 2012, Geoffrey Hinton, a professor at the University of Toronto, created AlexNet together with two of his students. This is a neural network for computer vision that took part in the ImageNet image recognition competition, in which systems had to recognize images with the lowest possible error. AlexNet won the competition with a top-5 error rate of 15.3%, more than 10 percentage points lower than that of the runner-up.

The winner of the contest showed that deep neural networks trained on GPUs can distinguish and classify images much better than other systems. The victory of the mechanical “brain” sparked interest in AI development once again, and Geoffrey Hinton received the 2018 Turing Award.

10. AlphaGo vs Lee Sedol

In 2016 a momentous event took place: the victory of AlphaGo over Lee Sedol. It all started in 2013, when scientists from DeepMind published a paper showing that an AI had learned to play several old Atari games and, in some of them, to win. Google bought the company very quickly, and a few years later DeepMind developed the AlphaGo neural network, which switched from Atari to the ancient board game Go.

The abilities of this program exceeded all expectations. It not only played several thousand games against its previous versions but also learned from its defeats and victories, and in 2016 it beat the famous Go player Lee Sedol with a score of 4:1.

11. Artificial Intelligence Becomes A Doctor

Yes, this is not a game of chess or Go. 2017 was truly a breakthrough year. The whole world seemed to be obsessed with perfecting artificial neural networks. For instance, AI learned to identify and classify bacteria and to distinguish red blood cells. Are you surprised? But this is just the tip of the iceberg.

Zebra-Med’s neural network learned to diagnose various diseases. A Chinese robot endowed with artificial intelligence passed the national medical licensing examination, the same test that human doctors must pass. It is amazing and really important for people.

12. AI Showed A Level Of Intelligence Higher Than Human And Made A Movie

For a long time, the history of AI was measured in years, but now computers and programs are getting smarter every month. For example, on January 15, 2018, an AI took a reading comprehension test and scored better than a human. The test, the Stanford Question Answering Dataset (SQuAD), is a widely used benchmark for machine reading comprehension. Artificial intelligence scored 82.6%, while the best human result was 82.3%. The gap of 0.3 percentage points is small, but it was the first time a machine outperformed people on this test. Neural networks can now be applied in many industries, where they can improve business processes and take over some tasks from people.

In the same year, a neural network that named itself Benjamin created its own short film in 48 hours. The black-and-white video “Zone Out” closely resembles a horror movie.

Nowadays, neural networks are helping scientists develop modern quantum computers.

Conclusion

Although the history of AI spans almost 80 years, the field is still quite young. Today, no one is surprised by machines that treat people or make movies. But this is only the beginning, and neural networks will soon open up many new possibilities.

Our company has experience with artificial intelligence and neural networks, in particular with speech recognition technology. We are glad to help you in developing and improving similar projects. Fill out the contact form and start putting your ideas into action today.